Apple has developed a system dubbed neuralMatch to detect child sexual abuse material (CSAM). For now, it will scan the iPhones of users in the United States.

The perks of the internet are obvious and well known, but as the saying goes, “with every blessing comes a curse.” The digital boom has brought its own concerns, and online child exploitation is one of them.

The number of pedophiles lurking online in search of innocent and vulnerable children to exploit keeps growing, and parents are increasingly worried, even panicking, about the rising rate of online child sexual abuse.

Apple will reportedly launch a new machine-learning-based system later this year, as part of upcoming versions of iOS and iPadOS, to strengthen child safety.

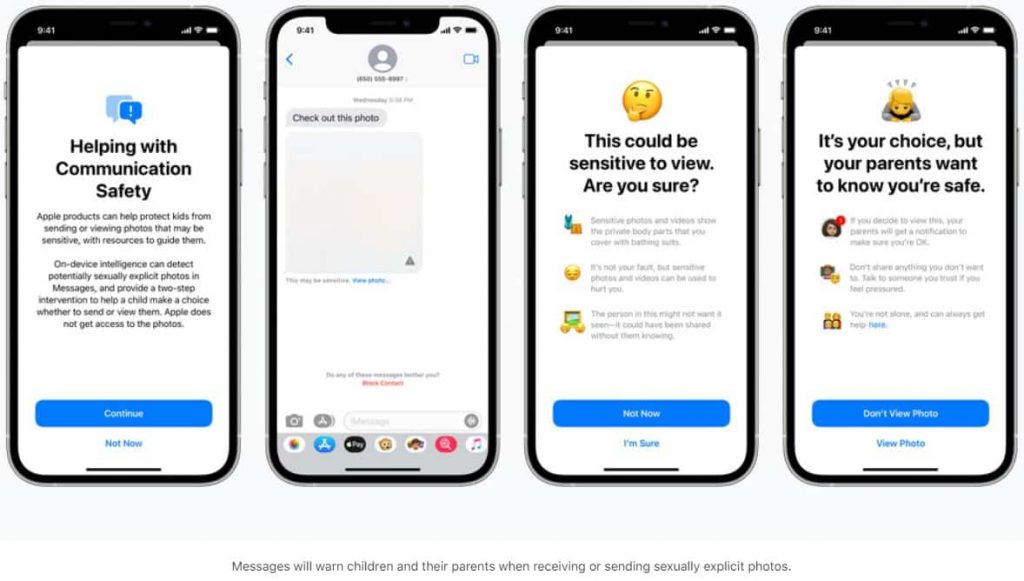

The system will scan iPhones across the US for child sexual abuse images. In addition, the Messages app on iPhones will issue a warning whenever a child receives or tries to send sexually explicit images.

About the New CSAM System


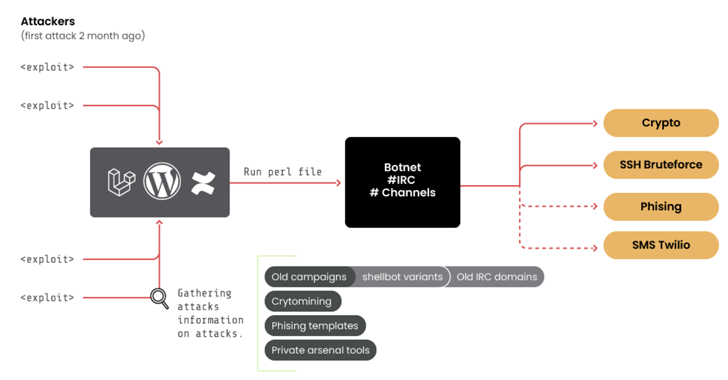

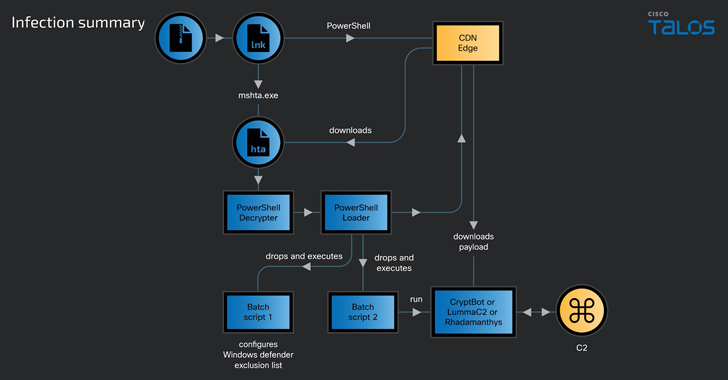

The system will launch soon despite reservations in the cybersecurity community, whose members warn that authoritarian governments could misuse it to conduct surveillance on their citizens.


However, Apple claims that the system is a new “application of cryptography” to limit the distribution of CSAM online without impacting user privacy.

How Does neuralMatch Work?

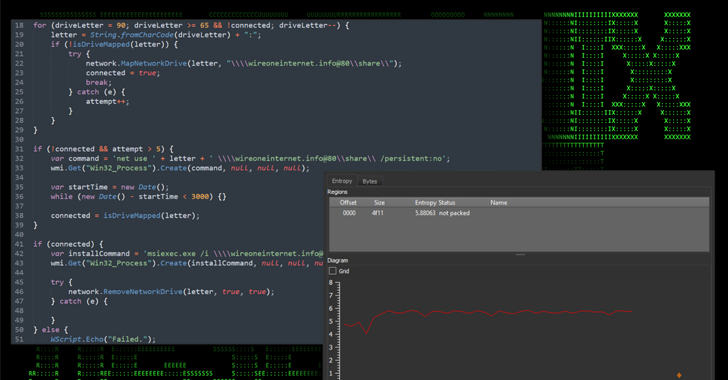

Before an image is stored in iCloud Photos, the new system checks it for matches against already known CSAM. If a match is found, a human reviewer will assess it thoroughly; Apple will then disable the account and notify the National Center for Missing and Exploited Children (NCMEC) about that user.

“This innovative new technology allows Apple to provide valuable and actionable information to NCMEC and law enforcement regarding the proliferation of known CSAM,” Apple noted.

“Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes,” the company wrote, adding that the system provides an “extremely high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account.”
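To make the on-device matching step more concrete, here is a minimal Python sketch of hash-based matching against a list of known images. It is an illustration under stated assumptions, not Apple's implementation. The "average hash" below is a simple textbook perceptual hash standing in for Apple's own algorithm. The names `average_hash`, `matches_known` and `should_escalate`, the Hamming-distance tolerance `max_distance`, and the review `threshold` are all hypothetical. Apple describes its system as an "application of cryptography," and that cryptographic layer is not modeled here.

```python
from collections.abc import Iterable


# Toy perceptual hash (an "average hash"), a HYPOTHETICAL stand-in for Apple's hash.
# Input: an 8x8 grayscale thumbnail with values 0-255. Output: a 64-bit integer.
def average_hash(pixels: list[list[int]]) -> int:
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        # One bit per pixel: 1 if brighter than the thumbnail's mean, else 0.
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    # Number of bits that differ between two hashes.
    return bin(a ^ b).count("1")


def matches_known(image_hash: int, known_hashes: Iterable[int], max_distance: int = 4) -> bool:
    # max_distance is an assumed tolerance so lightly edited copies still match.
    return any(hamming(image_hash, h) <= max_distance for h in known_hashes)


def should_escalate(upload_hashes: Iterable[int], known_hashes: set[int], threshold: int = 1) -> bool:
    # Hypothetical policy: send the account to human review once `threshold`
    # uploads match. The default of 1 mirrors "if a match is found" above.
    matches = sum(1 for h in upload_hashes if matches_known(h, known_hashes))
    return matches >= threshold


# Usage: a "known" image, an edited copy of it, and an unrelated picture.
known_image = [[10] * 4 + [200] * 4 for _ in range(8)]
brightened = [[p + 20 for p in row] for row in known_image]
unrelated = [[200] * 4 + [10] * 4 for _ in range(8)]
known = {average_hash(known_image)}

print(matches_known(average_hash(brightened), known))      # True
print(matches_known(average_hash(unrelated), known))       # False
print(should_escalate([average_hash(brightened)], known))  # True
```

Unlike a cryptographic hash, a perceptual hash is designed to give the same or a nearby value for an edited copy of an image. That is why the brightened copy still matches, while a different picture does not.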

Could It Be Exploited?

Critics have raised concerns that the technology could be expanded to scan phones for banned content or political speech. Separately, Apple plans to scan users’ encrypted messages for sexually explicit content as another child safety measure.

It is worth noting that the detection system will only flag images that match known child sexual abuse material already in NCMEC’s database. So parents need not worry that innocent photos of their own children will be flagged.

According to Matthew Green, a top cryptography researcher at Johns Hopkins University, the system could be used to frame innocent people by sending them images crafted to trigger a match with known child abuse images. Because such systems are easily tricked, he argued, Apple’s algorithm could be fooled and anyone could be framed.

“Researchers have been able to do this pretty easily. What happens when the Chinese government says, ‘Here is a list of files that we want you to scan for…’ Does Apple say no? I hope they say no, but their technology won’t say no,” Green stated.
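Green's warning that such systems are easily tricked can be illustrated with a toy perceptual hash. The sketch below is hypothetical and does not attack Apple's algorithm. It assumes a hash that shrinks a 16x16 grayscale image to 8x8 by averaging 2x2 blocks and then applies an "average hash." The helper names `downscale_2x`, `average_hash` and `toy_hash` are made up for this example. Two images with very different pixels, one flat and one a high-contrast checkerboard, shrink to the same thumbnail and therefore receive the same hash.

```python
# Toy perceptual hash (HYPOTHETICAL): downscale a 16x16 grayscale image to 8x8
# by averaging 2x2 blocks, then set one bit per thumbnail pixel above the mean.
def downscale_2x(pixels: list[list[int]]) -> list[list[int]]:
    height, width = len(pixels), len(pixels[0])
    return [
        [
            (pixels[y][x] + pixels[y][x + 1] + pixels[y + 1][x] + pixels[y + 1][x + 1]) // 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


def average_hash(pixels: list[list[int]]) -> int:
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def toy_hash(image: list[list[int]]) -> int:
    return average_hash(downscale_2x(image))


# Image A: two flat gray halves.
image_a = [[100] * 8 + [150] * 8 for _ in range(16)]

# Image B: a checkerboard (0/200 on the left, 50/250 on the right) whose
# 2x2 blocks average to exactly the same values as image A.
image_b = [
    [
        (0 if (x + y) % 2 == 0 else 200) if x < 8 else (50 if (x + y) % 2 == 0 else 250)
        for x in range(16)
    ]
    for y in range(16)
]

# Largest per-pixel difference between the two images.
pixel_gap = max(
    abs(a - b) for row_a, row_b in zip(image_a, image_b) for a, b in zip(row_a, row_b)
)
print(pixel_gap)                               # 100
print(toy_hash(image_a) == toy_hash(image_b))  # True: the hashes collide
```

Every pixel differs by 100 gray levels, yet the hashes are identical. Any pattern that averages out to the same thumbnail collides in the same way. That is the kind of weakness someone crafting images to trigger a match would look for, although real perceptual hashes are more elaborate than this toy.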

Here’s what Edward Snowden has to say about the new development:

No matter how well-intentioned, @Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.

They turned a trillion dollars of devices into iNarcs—*without asking.* https://t.co/wIMWijIjJk

— Edward Snowden (@Snowden) August 6, 2021